If you want to work with a large amount of unsorted and unlabeled data, some machine learning techniques might be helpful. A common task in this area is text classification. In this article, you'll get a basic idea of how this can be achieved using so-called Naive Bayes classifiers and how a simple document classification can be implemented in JavaScript.

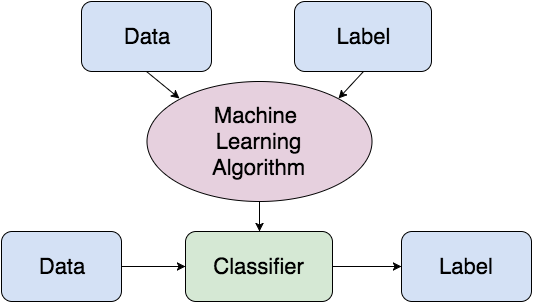

The general idea of supervised machine learning is that you train a system with labeled data. A machine learning algorithm is fed the data in the training set, and the result of this training is a so-called classifier. The trained system is then able to classify unknown, unlabeled data based on what it has learned.

A classifier that is very commonly used in text classification is the Naive Bayes classifier. It is based on Bayes' Theorem, which is used to calculate conditional probabilities. The basic idea is to find the probability that a document belongs to a class, based on the words in the text, where the individual words are treated as independent features (this independence assumption is what makes the classifier "naive"). The formula for the calculation can be written like this:

` P(C|W) = (P(W|C)*P(C))/(P(W)) `

- P(C|W) = How likely is it that a document containing the words W belongs to class C?

- P(W|C) = How likely is it to observe the words W in a document of class C?

- P(C) = How likely is it for a document to belong to class C?

- P(W) = How likely are the words W to appear in general?
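To make the formula a bit more concrete, here is a small worked example in JavaScript. The counts are made up purely for illustration and are not taken from the data set used later: assume 100 messages, 40 of which are spam, and the word "prize" appearing in 20 spam messages and 2 ham messages.

```javascript
// Hypothetical counts, chosen only to illustrate the formula.
const totalMessages = 100;
const spamMessages = 40;
const spamWithWord = 20; // spam messages containing "prize"
const hamWithWord = 2; // ham messages containing "prize"

const pSpam = spamMessages / totalMessages; // P(C) = 0.4
const pWordGivenSpam = spamWithWord / spamMessages; // P(W|C) = 0.5
const pWord = (spamWithWord + hamWithWord) / totalMessages; // P(W) = 0.22

// Bayes' Theorem: P(C|W) = P(W|C) * P(C) / P(W)
const pSpamGivenWord = (pWordGivenSpam * pSpam) / pWord;
console.log(pSpamGivenWord.toFixed(3)); // 0.909
```

Under these assumed counts, a message containing "prize" would be spam with a probability of roughly 91%.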

A well-written and detailed explanation of the Naive Bayes classifier by Sebastian Raschka can be found here.

Naive Bayes classifiers can achieve good results when predicting whether a document belongs to one category or another. Possible use cases include:

- Which category (sports, politics, economics...) does a newspaper article belong to?

- Which author was a text written by?

- Is a message spam or not spam?

In this blog post, we'll have a closer look at the last example and set up a classifier that is able to distinguish between spam and not spam (ham) messages. To get started, the first thing we need is a training set of labeled 'spam' and 'ham' messages. Luckily, such a collection of classified SMS texts can be found here.

This set consists of 1002 ham and 322 spam messages and can be downloaded as a .txt file. I converted the content to JSON for easier use. As mentioned before, a training set and a test set are needed. The training set consists of 600 elements (300 of each class) and the test set contains 44 elements (22 of each class).
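One possible way to produce such a balanced split is sketched below. The helper `splitByLabel` and the tiny sample data are hypothetical and only serve as an illustration; this is not necessarily how the sets used in this post were created.

```javascript
// Hypothetical helper: takes the first `trainPerClass` messages of each label
// for training and the next `testPerClass` messages of each label for testing.
function splitByLabel(messages, trainPerClass, testPerClass) {
  const train = [];
  const test = [];
  const seen = new Map(); // label -> number of messages seen so far

  for (const message of messages) {
    const count = seen.get(message.label) || 0;
    if (count < trainPerClass) {
      train.push(message);
    } else if (count < trainPerClass + testPerClass) {
      test.push(message);
    }
    seen.set(message.label, count + 1);
  }
  return { train, test };
}

// Made-up mini data set: 3 ham and 3 spam messages.
const messages = [
  { text: 'ham one', label: 'ham' },
  { text: 'spam one', label: 'spam' },
  { text: 'ham two', label: 'ham' },
  { text: 'spam two', label: 'spam' },
  { text: 'ham three', label: 'ham' },
  { text: 'spam three', label: 'spam' },
];

const split = splitByLabel(messages, 2, 1);
console.log(split.train.length, split.test.length); // 4 2
```

For the numbers above, the call would be `splitByLabel(allMessages, 300, 22)`, where `allMessages` stands for the full converted data set. Shuffling the messages first would avoid any ordering bias from the source file.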

    const train = [
      { text: 'Are you comingdown later?', label: 'ham' },
      { text: 'Me too! Have a lovely night xxx', label: 'ham' },
      {
        text: 'Want explicit SEX in 30 secs? Ring 02073162414 now! Costs 20p/min',
        label: 'spam',
      },
      {
        text: 'You have won ?1,000 cash or a ?2,000 prize! To claim, call09050000327',
        label: 'spam',
      },
      //...
    ];

    const test = [
      { text: 'Thinking of u ;) x', label: 'ham' },
      {
        text: '449050000301 You have won a £2,000 price! To claim, call 09050000301.',
        label: 'spam',
      },
      //...
    ];

There are several JavaScript libraries that can be used for classifying documents.

We will have a closer look at Natural, which provides functions for various language processing tasks, and will make use of Natural's `BayesClassifier`.

    const natural = require('natural');
    const classifier = new natural.BayesClassifier();

After creating the classifier, the training data can be added using the function `addDocument()`. This function takes a text and the correct label for that text as parameters. We can iterate over the training set and add each element to the classifier. After all the data has been added, the classifier can be trained.

    for (let i = 0; i < train.length; i++) {
      classifier.addDocument(train[i].text, train[i].label);
    }
    classifier.train();
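To give a rough idea of what training and classification involve conceptually, here is a minimal multinomial Naive Bayes sketch in plain JavaScript. It is not Natural's implementation: the class name `TinyNaiveBayes`, the simplified tokenizer, and the add-one (Laplace) smoothing are assumptions made for this illustration. P(W) is left out because it is the same for every class and does not change which class scores highest.

```javascript
// Minimal multinomial Naive Bayes sketch (not Natural's implementation).
class TinyNaiveBayes {
  constructor() {
    this.docCounts = new Map(); // label -> number of documents
    this.wordCounts = new Map(); // label -> Map(word -> count)
    this.totalWords = new Map(); // label -> total number of words
    this.vocabulary = new Set(); // all distinct words seen so far
    this.totalDocs = 0;
  }

  // Simplified tokenizer: lowercase, split on non-alphanumeric characters.
  tokenize(text) {
    return text.toLowerCase().split(/[^a-z0-9]+/).filter(Boolean);
  }

  addDocument(text, label) {
    if (!this.wordCounts.has(label)) {
      this.docCounts.set(label, 0);
      this.wordCounts.set(label, new Map());
      this.totalWords.set(label, 0);
    }
    this.docCounts.set(label, this.docCounts.get(label) + 1);
    this.totalDocs++;
    const counts = this.wordCounts.get(label);
    for (const word of this.tokenize(text)) {
      counts.set(word, (counts.get(word) || 0) + 1);
      this.totalWords.set(label, this.totalWords.get(label) + 1);
      this.vocabulary.add(word);
    }
  }

  classify(text) {
    const words = this.tokenize(text);
    let bestLabel = null;
    let bestScore = -Infinity;
    for (const [label, docCount] of this.docCounts) {
      // log P(C) + sum of log P(w|C); logarithms avoid numeric underflow.
      let score = Math.log(docCount / this.totalDocs);
      const counts = this.wordCounts.get(label);
      const denominator = this.totalWords.get(label) + this.vocabulary.size;
      for (const word of words) {
        // Add-one smoothing keeps unseen words from zeroing the product.
        score += Math.log(((counts.get(word) || 0) + 1) / denominator);
      }
      if (score > bestScore) {
        bestScore = score;
        bestLabel = label;
      }
    }
    return bestLabel;
  }
}

// Made-up training messages, for illustration only.
const nb = new TinyNaiveBayes();
nb.addDocument('You have won a cash prize, call now', 'spam');
nb.addDocument('Claim your free prize now', 'spam');
nb.addDocument('Are you coming down later', 'ham');
nb.addDocument('Have a lovely night', 'ham');

console.log(nb.classify('call now to claim your prize')); // spam
console.log(nb.classify('are you coming later')); // ham
```

In this sketch the counting happens in `addDocument()`, so there is no separate training step.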

When the texts are added to the classifier, they are preprocessed automatically. Each string is tokenized, which means it is split up into single words. From these words, all so-called stop words are removed. Stop words are words that appear often and therefore carry little information about the content, for example "the", "a" and "and". The remaining words are also reduced to their root form, which is called stemming. This preprocessing is done by Natural's `PorterStemmer` using the function `tokenizeAndStem()`.

    const stemmer = natural.PorterStemmer;
    stemmer.tokenizeAndStem(
      'Someone has conacted our dating service and entered your phone because they fancy you!'
    );
    // returns [ 'someon', 'conact', 'date', 'servic', 'enter', 'phone', 'fanci' ]
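The first two preprocessing steps, tokenizing and stop word removal, can be sketched in a few lines of plain JavaScript. The helper `tokenizeWithoutStopWords` and the three-word stop list are hypothetical and much simpler than what Natural does; stemming is intentionally left out.

```javascript
// Illustrative stop word list; a real list is considerably longer.
const stopWords = new Set(['the', 'a', 'and']);

// Hypothetical helper: lowercase the text, split it into words,
// and drop every word that is in the stop word list.
function tokenizeWithoutStopWords(text) {
  return text
    .toLowerCase()
    .split(/[^a-z0-9]+/)
    .filter((word) => word.length > 0 && !stopWords.has(word));
}

console.log(tokenizeWithoutStopWords('The cat and the dog chase a ball'));
// [ 'cat', 'dog', 'chase', 'ball' ]
```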

When the training is finished, the classifier can be used to predict the label of unknown data. This can be achieved with the `classify()` function. The unlabeled text is passed as a parameter and the predicted label is returned.

    let correctResults = 0;
    for (let i = 0; i < test.length; i++) {
      const result = classifier.classify(test[i].text);
      if (result === test[i].label) {
        correctResults++;
      }
    }
    console.log(`Correct Results: ${correctResults}/${test.length}`);
    // Correct Results: 44/44
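A single count of correct results does not show which class the errors fall into. The sketch below also records, per actual label, how often each label was predicted. The helper `evaluate`, the stand-in `keywordClassifier`, and the sample messages are hypothetical; with Natural, the first argument could be `(text) => classifier.classify(text)`.

```javascript
// Hypothetical helper: `classify` is any function mapping a text to a label.
function evaluate(classify, testSet) {
  const confusion = {}; // confusion[actual][predicted] = count
  let correct = 0;
  for (const { text, label } of testSet) {
    const predicted = classify(text);
    confusion[label] = confusion[label] || {};
    confusion[label][predicted] = (confusion[label][predicted] || 0) + 1;
    if (predicted === label) correct++;
  }
  return { accuracy: correct / testSet.length, confusion };
}

// Stand-in classifier for this demo: any text containing "won" counts as spam.
const keywordClassifier = (text) => (/\bwon\b/i.test(text) ? 'spam' : 'ham');

// Small made-up sample, for illustration only.
const sample = [
  { text: 'Thinking of u ;) x', label: 'ham' },
  { text: 'You have won a prize! Call now', label: 'spam' },
  { text: 'Free entry, text WIN to claim', label: 'spam' },
];

const report = evaluate(keywordClassifier, sample);
console.log(report.accuracy.toFixed(2)); // 0.67
console.log(report.confusion);
// { ham: { ham: 1 }, spam: { spam: 1, ham: 1 } }
```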

And that's it! Using our training and test sets, 100% of the test data could be classified correctly. To give you an impression of what else can be done with this simple technique, we created a tool that classifies tweets by the US presidential candidates Hillary Clinton, Bernie Sanders and Donald Trump, which you can find and play around with here.

Further Reading