This article was originally published on datadrivenjournalism.

TL;DR: You can find the script we used to generate the MIDI file in our from-data-to-sound repository.

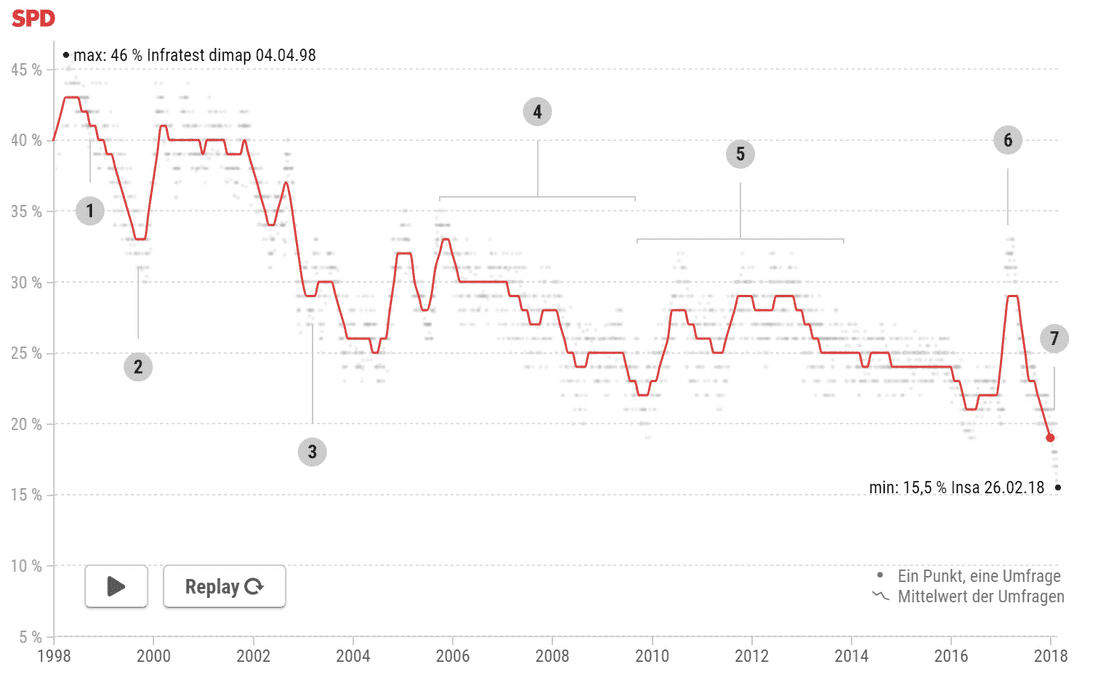

In Berliner Morgenpost’s recent project, Der Sound zum tiefen Fall der SPD, the interactive team created the newsroom’s first sonification (the process of converting data into sound). Our data-driven composition reflects 20 years of polling data for the Social Democratic Party of Germany (SPD), scraped from wahlrecht. To complement the musical track, we also created a data visualisation that moves in line with our sonification and highlights the SPD’s falling popularity.

In this post, I will explain how we generated an audio file from data and put a sound to the deep fall of the SPD.

Should we do a sonification at all?

In our case, we wanted to come up with a new kind of interactive to explore a dataset that our readers have probably already seen. Our aim was to increase our readers’ (or, in this case, our listeners’) interest in the SPD’s position. Since the party’s polling results keep going down, we imagined that a falling sound pattern would be easy for audiences to understand, because it mirrors the bad situation that the SPD is in right now.

How to get started?

Before we started developing the project, we checked out other sonification examples and explanations. A common method is to use an oscillator and adjust its frequency depending on the current value. Human hearing ranges from roughly 20 Hz to 20 kHz, so there are about 20,000 frequencies to map your data to. We tried tone.js to dynamically generate sounds based on the data. It worked well, but we didn't like the result: the sound was too artificial and very annoying. We only had a small range of values (between 18% and 43%), so we decided to use notes to represent our values and a piano to generate a more natural sound.
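To make the oscillator approach concrete, here is a minimal sketch that maps a value to a frequency. It is not taken from the project's script: the function name `valueToFrequency`, the 220 to 880 Hz target range and the exponential interpolation are all assumptions chosen for illustration. Exponential interpolation is used because pitch perception is roughly logarithmic, so equal data steps sound like equal pitch steps.

```javascript
// Map a data value onto an oscillator frequency in Hz.
// Values outside the domain are clamped to its edges.
function valueToFrequency(value, domain, range) {
  const [dMin, dMax] = domain;
  const [fMin, fMax] = range;
  // Normalised position of the value inside the domain, between 0 and 1.
  const t = Math.min(1, Math.max(0, (value - dMin) / (dMax - dMin)));
  // Exponential interpolation between the lowest and highest frequency.
  return fMin * Math.pow(fMax / fMin, t);
}

const pollDomain = [18, 43];     // polling range mentioned in the article
const audibleRange = [220, 880]; // hypothetical choice: two octaves starting at 220 Hz

const frequencies = [18, 30.5, 43].map((value) =>
  valueToFrequency(value, pollDomain, audibleRange)
);
console.log(frequencies); // roughly [220, 440, 880]
```

The resulting numbers could then be fed to any oscillator, for example one created with tone.js or the Web Audio API.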

Finding the right scale

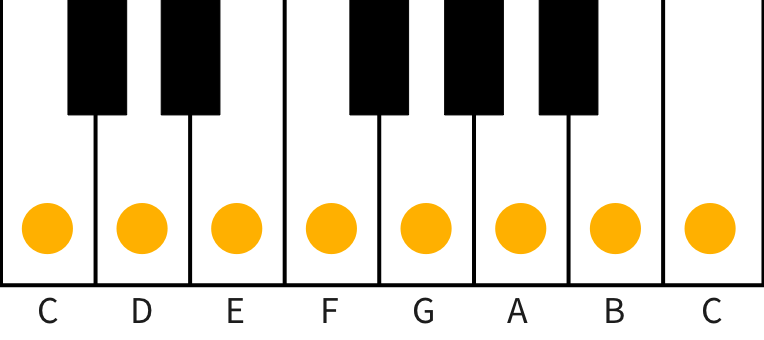

If you want to map your data to notes you need to decide on a scale to use. Our first idea was to use a classic "C major" scale because it sounds nice and most of our readers should be used to it:

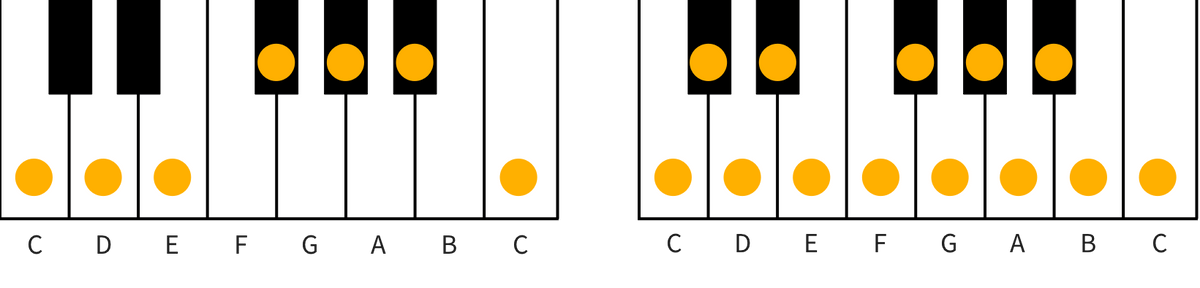

Unfortunately, major and minor scales don't work well for sonifications. They include both whole tone and half tone steps, so the distance between the steps is not equal. As seen in the image above, there is a half tone step between "E" and "F". This means that the frequencies of these two notes are closer together than those of "C" and "D", for example, which are a whole tone step apart. If you were to map a "1" to "C", a "2" to "D" and so on, the distances between the values wouldn't match the distances between the generated tones. The solution is to use a whole tone scale, which consists only of whole tone steps, or a chromatic scale, which consists only of half tone steps. In both of these scales, all neighbouring notes are the same distance apart, so you can safely use them to represent your data.
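The difference between the scales can be checked with a few lines of code. The sketch below is an illustration only: the semitone offsets are standard music theory, while the names `scales`, `stepSizes` and `hasEqualSteps` are made up for this example.

```javascript
// Semitone offsets from the root note for one octave of each scale.
const scales = {
  major: [0, 2, 4, 5, 7, 9, 11, 12],
  wholeTone: [0, 2, 4, 6, 8, 10, 12],
  chromatic: [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12],
};

// Distances between neighbouring notes, measured in semitones.
function stepSizes(offsets) {
  return offsets.slice(1).map((offset, i) => offset - offsets[i]);
}

// A scale maps data linearly only if every step has the same size.
function hasEqualSteps(offsets) {
  const steps = stepSizes(offsets);
  return steps.every((step) => step === steps[0]);
}

console.log(stepSizes(scales.major));         // [2, 2, 1, 2, 2, 2, 1]
console.log(hasEqualSteps(scales.major));     // false
console.log(hasEqualSteps(scales.wholeTone)); // true
console.log(hasEqualSteps(scales.chromatic)); // true
```

The two `1` entries in the major scale are the half tone steps between "E" and "F" and between "B" and "C".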

A large piano has 88 keys. If you want to create a natural sound, you could sonify a data set with a value range of 88 for a chromatic scale, or a range of ~40 for a whole tone scale. For our application, we selected a whole tone scale because the differences between the values are easier to hear and we only had a range of 25.
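A quick way to check whether a data range fits a scale is to count the notes and octaves it needs. The following is a rough sketch under the assumption that every integer value gets its own note; the helper `planScale` and its constants are hypothetical names, not part of any library.

```javascript
const NOTES_PER_OCTAVE = { chromatic: 12, wholeTone: 6 };
const SEMITONES_PER_STEP = { chromatic: 1, wholeTone: 2 };
const PIANO_KEYS = 88;

// Work out how many notes and octaves an integer value range needs,
// and whether that span still fits on an 88-key piano.
function planScale(min, max, scale) {
  const notesNeeded = max - min + 1; // assumption: one note per integer value
  const octaves = Math.ceil(notesNeeded / NOTES_PER_OCTAVE[scale]);
  // Number of piano keys between the lowest and highest note, inclusive.
  const keysSpanned = (notesNeeded - 1) * SEMITONES_PER_STEP[scale] + 1;
  return { notesNeeded, octaves, fitsOnPiano: keysSpanned <= PIANO_KEYS };
}

console.log(planScale(18, 43, 'wholeTone')); // { notesNeeded: 26, octaves: 5, fitsOnPiano: true }
console.log(planScale(18, 43, 'chromatic')); // { notesNeeded: 26, octaves: 3, fitsOnPiano: true }
```

For values between 18 and 43, a whole tone scale therefore needs five octaves, while a chromatic scale would squeeze the same data into three.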

From data to notes

To keep the project simple, we decided to create a MIDI file so that we could then use music production software like LMMS, GarageBand or onlinesequencer.net to find the right instrument and do some fine tuning, such as adjusting the length of the notes. To create the MIDI files, I found a great Node.js library called Scribbletune. It's well documented and easy to use. Another possible method would have been to play the sounds programmatically with a library like tone.js.

🎵 Creating the MIDI file

You can find the whole script in our from-data-to-sound repository. For our application, we used a whole tone scale with "C" as the base tone ("C", "D", "E", "Gb", "Ab" and "Bb"). With Scribbletune, you can define which "C" you want to play with a number suffix like "C0" for a low "C" or "C7" for a very high one. We wanted to start at "C1" for the minimum value 18 and then go all the way up, so that lower notes represent bad poll results and higher notes represent better ones.

```javascript
const scribble = require('scribbletune');

// example data
const data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 10, 10, 9, 8, 7, 6, 5, 4, 3, 2, 1];

const octaves = [...Array(5)].map((d, i) => i + 1); // [1, 2, 3, 4, 5]

const notes = octaves.reduce(
  (result, octave) => result.concat(scribble.scale('c', 'whole tone', octave, false)),
  []
);
```

As you can see, we used Scribbletune’s scale function. The function returns an array with notes depending on the passed parameters. In each loop we used a higher octave in order to build a whole tone scale over five octaves. The notes array therefore contains the following values:

```javascript
[
  'c1', 'd1', 'e1', 'gb1', 'ab1', 'bb1',
  'c2', 'd2', 'e2', 'gb2', 'ab2', 'bb2',
  'c3', 'd3', 'e3', 'gb3', 'ab3', 'bb3',
  'c4', 'd4', 'e4', 'gb4', 'ab4', 'bb4',
  'c5', 'd5', 'e5', 'gb5', 'ab5', 'bb5',
];
```
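If you want to see what happens behind the scale call, or if your version of Scribbletune has a different scale signature, the same array can be built by hand. This is a minimal sketch that does not use the library; `WHOLE_TONE_FROM_C` and `wholeToneNotes` are hypothetical names chosen for this example.

```javascript
// The six note names of the whole tone scale that starts on C.
const WHOLE_TONE_FROM_C = ['c', 'd', 'e', 'gb', 'ab', 'bb'];

// Repeat the scale over several octaves, appending the octave number to each name.
function wholeToneNotes(firstOctave, octaveCount) {
  const result = [];
  for (let octave = firstOctave; octave < firstOctave + octaveCount; octave++) {
    for (const name of WHOLE_TONE_FROM_C) {
      result.push(`${name}${octave}`);
    }
  }
  return result;
}

const notesByHand = wholeToneNotes(1, 5);
console.log(notesByHand.length); // 30
console.log(notesByHand[0]);     // 'c1'
console.log(notesByHand[29]);    // 'bb5'
```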

We then mapped over our data and created the MIDI data with the help of the clip function:

```javascript
const min = Math.min(...data); // this is 18 in our case

const midiData = scribble.clip({
  notes: data.map((d) => notes[d - min]),
  pattern: 'x',
  noteLength: '1/16',
});
```
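The lookup `notes[d - min]` only works for integer values that stay within the length of the notes array. As a hedged sketch of a more defensive version, the hypothetical helper `valuesToNotes` below rounds each value and throws if the data needs more notes than are available. Rounding is an assumption made for this example; the article does not say how fractional values were handled.

```javascript
// Build the 30-note whole tone scale from c1 to bb5 used in the article.
const scaleNotes = [1, 2, 3, 4, 5].flatMap((octave) =>
  ['c', 'd', 'e', 'gb', 'ab', 'bb'].map((name) => `${name}${octave}`)
);

// Map each value to a note, with the smallest value landing on the first note.
function valuesToNotes(values, availableNotes) {
  const min = Math.min(...values);
  return values.map((value) => {
    const index = Math.round(value - min); // assumption: round fractional values
    if (index >= availableNotes.length) {
      throw new RangeError(`Value ${value} needs note #${index}, but only ${availableNotes.length} notes exist`);
    }
    return availableNotes[index];
  });
}

console.log(valuesToNotes([18, 25, 43, 18], scaleNotes)); // ['c1', 'd2', 'd5', 'c1']
```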

Finally, we wrote the MIDI file 🎉:

```javascript
scribble.midi(midiData, 'data-sonification.mid');
```

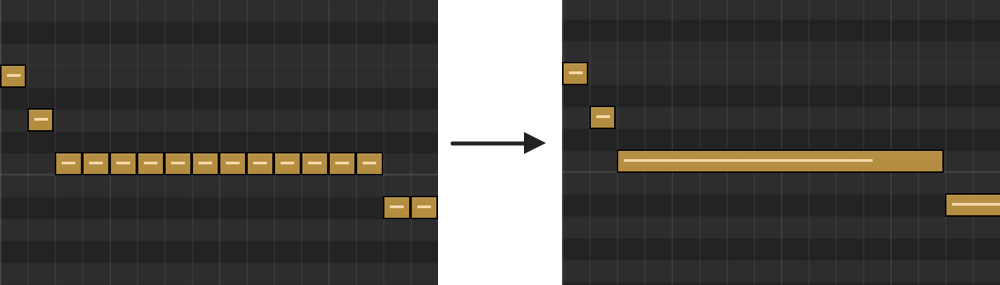

After we generated the file, we adjusted the notes manually. We wanted to hold a note if it didn’t change in order to make the sound less stressful.
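We did this step by hand, but it could also be done in code. The following sketch merges runs of identical notes into one longer note; the helpers `holdRepeats` and `toPattern` are hypothetical. The pattern string assumes that Scribbletune treats `x` as a note start and `_` as a sustain of the previous note, which you should check against the documentation of the version you use.

```javascript
// Collapse consecutive identical notes into { note, steps } entries.
function holdRepeats(noteSequence) {
  const held = [];
  for (const note of noteSequence) {
    const last = held[held.length - 1];
    if (last && last.note === note) {
      last.steps += 1; // same note as before: extend it instead of retriggering
    } else {
      held.push({ note, steps: 1 });
    }
  }
  return held;
}

// Turn the held notes into a pattern string: one 'x' per note,
// followed by one '_' for every extra step the note is held (assumed syntax).
function toPattern(held) {
  return held.map(({ steps }) => 'x' + '_'.repeat(steps - 1)).join('');
}

const held = holdRepeats(['c1', 'd1', 'd1', 'd1', 'e1']);
console.log(held.map((entry) => entry.note)); // ['c1', 'd1', 'e1']
console.log(toPattern(held));                 // 'xx__x'
```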

The last step was to connect the MIDI signal with an instrument and export it as an MP3. With onlinesequencer.net you can easily adjust the MIDI file, and you can then use Solmire to export the MP3.

Any questions?

Further Reading