Data Analysis

– April 16, 2015

Visualizing data with Elasticsearch, Logstash and Kibana

Christopher Möller

@chrtze

When designing visualizations, we sometimes have to deal with bigger datasets than standard tools can handle. For example, Microsoft Excel is limited to roughly 1 million rows (1,048,576 per worksheet), and web-based tools are often even more limited.

If your files exceed this limit, consider using a database that can easily handle large datasets. Even for smaller datasets, the following techniques let you create interactive visualizations in a short amount of time.

In this example, I use Logstash, Elasticsearch and Kibana to create an interactive dashboard from raw data. You don't need deep programming knowledge: if you know how to run commands in a terminal, you will have no trouble following these steps.

The ELK Stack

ELK stands for Elasticsearch, Logstash and Kibana, three tools that together let you turn raw data into visualizations.

Elasticsearch is a very fast search engine. It lets you search and filter all sorts of data through a simple API. Because the API is RESTful, you can use it not only for data analysis but also in production as the backend of web applications.
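To give an idea of what "a simple RESTful API" means in practice, here is a minimal Python sketch (standard library only) that builds a search request as JSON and sends it over HTTP. It assumes Elasticsearch on its default address, http://localhost:9200, without authentication, and uses the index name "stock" from later in this post; the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: Elasticsearch runs locally on its default port without authentication.
ES_URL = "http://localhost:9200"


def build_search_request(index, field, value, size=10):
    """Build (but do not send) a POST /<index>/_search request with a match query."""
    body = {"query": {"match": {field: value}}, "size": size}
    return urllib.request.Request(
        f"{ES_URL}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def search(index, field, value, size=10):
    """Send the search request and return the _source of each matching document."""
    with urllib.request.urlopen(build_search_request(index, field, value, size)) as resp:
        result = json.load(resp)
    return [hit["_source"] for hit in result["hits"]["hits"]]
```

With Elasticsearch running and the data inserted, `search("stock", "Date", "2015-04-02")` would return the documents for that trading day. Any HTTP client works the same way, which is what makes the API easy to use from web applications.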

Logstash was built for collecting and processing log files, but it can also clean data from all sorts of sources and stream it into a database. It ships with an output for Elasticsearch, so the two work very well together.

Kibana is a browser-based visual interface for Elasticsearch. It is good at visualizing data stored in Elasticsearch and requires no programming skills, as visualizations are configured entirely through the interface.

Choosing a dataset

The first thing you need is some data to analyze. As an example, I used historical prices of the Apple stock, which you can download from Yahoo's historical stock database. Below is an excerpt of the raw data.

```
Date,Open,High,Low,Close,Volume,Adj Close
2015-04-02,125.03,125.56,124.19,125.32,32120700,125.32
2015-04-01,124.82,125.12,123.10,124.25,40359200,124.25
2015-03-31,126.09,126.49,124.36,124.43,41852400,124.43
2015-03-30,124.05,126.40,124.00,126.37,46906700,126.37
2015-03-27,124.57,124.70,122.91,123.25,39395000,123.25
2015-03-26,122.76,124.88,122.60,124.24,47388100,124.24
2015-03-25,126.54,126.82,123.38,123.38,51106600,123.38
2015-03-24,127.23,128.04,126.56,126.69,32713000,126.69
...
```

I chose this dataset because it illustrates a common use case for data visualization. As you will see later, the data needs a bit of cleaning before Kibana can use it, so this dataset covers all the steps you will need for other datasets as well:

- data-cleaning

- streaming the data into Elasticsearch

- creating visualizations using Kibana

Run Elasticsearch

Before we can insert any data, Elasticsearch must be running on your machine. If you haven't already, download it from the Elastic website. Once it is downloaded and extracted, start it from the command line:

```
$ cd path/to/elasticsearch
$ bin/elasticsearch
```

You should see some log output, which means the database is up and running and waiting for input or queries. For the next steps, open another terminal session and leave Elasticsearch running.
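Instead of reading the console output, you can also check from a script whether Elasticsearch answers: a GET request to the root URL returns a small JSON document with cluster and version information. A minimal Python sketch, assuming the default address http://localhost:9200 and plain HTTP without security (as in the setup described here); the function name is hypothetical.

```python
import json
import urllib.error
import urllib.request


def elasticsearch_info(url="http://localhost:9200", timeout=2.0):
    """Return the parsed JSON from GET / if Elasticsearch answers, else None."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return json.load(resp)
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a non-JSON answer: treat as "not running".
        return None
```

For example, `info = elasticsearch_info()` followed by `info["version"]["number"]` gives the running version, while `None` means nothing is listening yet.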

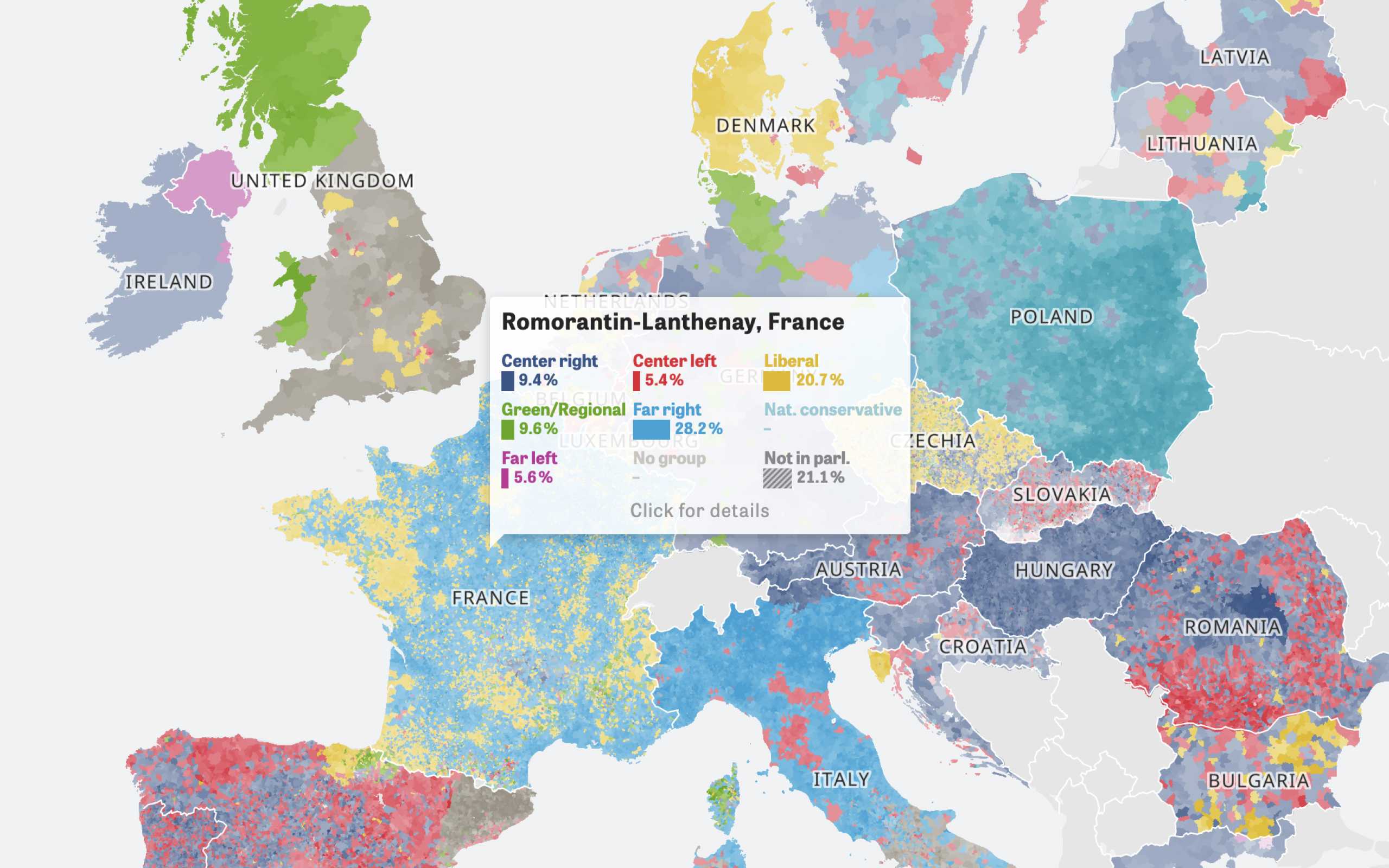


Insert the data

Now we have to stream the data from the CSV source file into the database. With Logstash, we can also manipulate and clean the data on the fly. I am using a CSV file in this example, but Logstash can handle other input types as well.

If you haven't done so yet, download Logstash. Like Elasticsearch, it doesn't require an installation. You can simply run it from the command line.

For our task, we have to tell Logstash where to get the data from, how to transform it and where to insert it. This is done with a simple config file, which you can write in any text editor. The first thing to configure is the data source; in this case, I am using the file input. The input section of our configuration file looks like this:

```
input {
  file {
    # The file input expects an absolute path
    path => "/path/to/data.csv"
    start_position => "beginning"
  }
}
```

Explanation:

The input section tells Logstash to use the CSV file as the data source and to start reading at the beginning of the file. Without start_position => "beginning", the file input defaults to "end" and only picks up lines appended after Logstash starts.

Now that Logstash is reading the file, it needs to know what to do with the data, so we configure the csv filter. If you are using another data format, check out other filters such as json or xml. Additionally, we convert the fields we want to chart (Open, High, Low, Close and Volume) to a numeric data type (float); this is required for visualizing them later. If your dataset is "dirtier", you can use other filters to clean it, for example the date filter for parsing dates or the mutate filter for lowercasing the string in a field.

```
filter {
  csv {
    separator => ","
    columns => ["Date","Open","High","Low","Close","Volume","Adj Close"]
  }
  mutate {
    convert => ["High", "float"]
  }
  mutate {
    convert => ["Open", "float"]
  }
  mutate {
    convert => ["Low", "float"]
  }
  mutate {
    convert => ["Close", "float"]
  }
  mutate {
    convert => ["Volume", "float"]
  }
}
```

Explanation:

The filter section tells Logstash which format our dataset is in (CSV in this case) and names its columns; these names become the field names in Elasticsearch. We then convert the numeric fields to float so that Kibana knows how to handle them.
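To check what the csv and mutate filters do to each line, or to prototype the cleaning step before writing the config, the following Python sketch mimics them on a string of CSV text. It is an approximation, not Logstash's implementation, and the function name parse_stock_csv is hypothetical. One detail it handles explicitly is the header line, which the csv filter in the config above does not treat specially.

```python
import csv
import io

# Same column names as in the Logstash csv filter above.
COLUMNS = ["Date", "Open", "High", "Low", "Close", "Volume", "Adj Close"]
# Fields converted to float by the mutate filters above ("Adj Close" stays a string).
FLOAT_FIELDS = ["Open", "High", "Low", "Close", "Volume"]


def parse_stock_csv(text):
    """Mimic the csv + mutate filters: one dict per data row, numbers as floats."""
    events = []
    for row in csv.reader(io.StringIO(text), delimiter=","):
        if not row or row[0] == "Date":
            # Skip blank lines and the header line.
            continue
        event = dict(zip(COLUMNS, row))
        for field in FLOAT_FIELDS:
            event[field] = float(event[field])
        events.append(event)
    return events
```

Running it on the excerpt above yields one dictionary per trading day, for example with `event["High"] == 125.56` for 2015-04-02.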

The last step is to tell Logstash where to send the data. As we want to stream it directly into Elasticsearch, we use the elasticsearch output. You can also specify multiple outputs to send the data to several destinations; here, I have added the stdout output to see the events in the console. It is important to specify an index name for Elasticsearch, because we will use this index later to configure Kibana. Below is the output section of our logstash.conf file.

```
output {
  elasticsearch {
    action => "index"
    # Logstash 1.x syntax; from 2.0 on, use hosts => ["localhost:9200"]
    host => "localhost"
    index => "stock"
    workers => 1
  }
  stdout {}
}
```

Explanation:

The output section is used to stream the input data to Elasticsearch. You also have to specify the name of the index which you want to use for the dataset.
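Under the hood, the elasticsearch output sends documents to Elasticsearch in batches through its bulk API. The sketch below only serializes a list of events into the newline-delimited JSON body that the bulk endpoint (POST /_bulk) expects, using the "stock" index from the config. It assumes a recent Elasticsearch version without mapping types; older versions such as those current in 2015 also expected a document type (_type) in each action line. The function name is hypothetical.

```python
import json


def to_bulk_body(events, index="stock"):
    """Serialize events into the NDJSON body of an Elasticsearch _bulk request.

    Each document is preceded by an action line telling Elasticsearch to
    index it into `index`; every line, including the last, ends with a newline.
    """
    lines = []
    for event in events:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(event))
    return "".join(line + "\n" for line in lines)
```

The resulting string would be sent with the header `Content-Type: application/x-ndjson`. Batching many documents per request is what keeps inserting large files fast.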

That's all. As you can see, a single config file does all the work: reading the data, transforming it and inserting it. You can check out the whole config used in the example here.

The final step is to run Logstash with the configuration file:

```
$ bin/logstash -f /path/to/logstash.conf
```

By default, Logstash keeps watching the input file for changes and does not exit on its own, so you can stop it (for example with Ctrl+C) once you see that all the data has been inserted.
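Since Logstash does not signal when it is done, one way to decide when to stop it is to compare the number of data rows in the CSV file with the document count that Elasticsearch reports via GET /stock/_count. A minimal sketch with hypothetical function names, assuming Elasticsearch on http://localhost:9200. Keep in mind that if the header line was indexed too, the index holds one document more than the file has data rows, and new documents can take about a second to show up in the count.

```python
import json
import urllib.request


def count_data_rows(lines):
    """Count non-empty lines, minus one for the CSV header."""
    non_empty = sum(1 for line in lines if line.strip())
    return max(non_empty - 1, 0)


def indexed_count(index="stock", es_url="http://localhost:9200"):
    """Return the number of documents in `index` (GET /<index>/_count)."""
    with urllib.request.urlopen(f"{es_url}/{index}/_count") as resp:
        return json.load(resp)["count"]
```

For example, open the CSV file, pass the file object to `count_data_rows`, and stop Logstash once `indexed_count()` has caught up with that number.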

Set up and run Kibana

Now that all the data is in our database, we are ready to visualize it. For this, I am using Kibana, which you can download from the Elastic website.

Starting Kibana is as simple as with Elasticsearch and Logstash. Again, you can run it from the command line:

```
$ cd path/to/kibana
$ bin/kibana
```

If everything works, a message gets printed to the console:

```
{
  "@timestamp": "2015-04-03T19:16:52.219Z",
  "level": "info",
  "message": "Listening on 127.0.0.1:5601",
  "node_env": "production"
}
```

Now everything is set up: Elasticsearch is running and holds the data we inserted with Logstash, and Kibana is ready for visualization.

Create Visualizations

First, point your web browser to http://localhost:5601, where Kibana is running. On the start screen, Kibana asks for the name of the index we want to use for our visualizations. Sticking to the example, enter the index name we specified when inserting the data with Logstash ("stock"). Kibana will then ask for a field containing a timestamp, which it uses for visualizing time-series data. In our case, this is the "Date" field.
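Kibana can only offer "Date" as the time field if Elasticsearch has mapped it as a date. Dynamic mapping usually recognizes values like 2015-04-02 as dates, but to be sure you can create the index with an explicit mapping before running Logstash. The sketch below builds such a mapping body for PUT /stock. It assumes a recent Elasticsearch version (typeless mappings, 7.x or later) and uses double for the numeric fields, which is my choice rather than part of the original setup; the function name is hypothetical.

```python
def stock_index_mapping():
    """Build the body for PUT /stock: "Date" as a date, numeric columns as doubles."""
    properties = {"Date": {"type": "date", "format": "yyyy-MM-dd"}}
    for field in ["Open", "High", "Low", "Close", "Volume", "Adj Close"]:
        # Elasticsearch coerces numeric strings (such as the unconverted
        # "Adj Close" values) into numbers by default.
        properties[field] = {"type": "double"}
    return {"mappings": {"properties": properties}}
```

Sending this body as JSON to http://localhost:9200/stock with a PUT request creates the empty index; Logstash then fills it, and Kibana will list "Date" as a time field.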

Now create the index pattern and choose "Visualize" from the top menu to create a new visualization. Next, we will create three different types of visualizations and then bring them together in one dashboard.

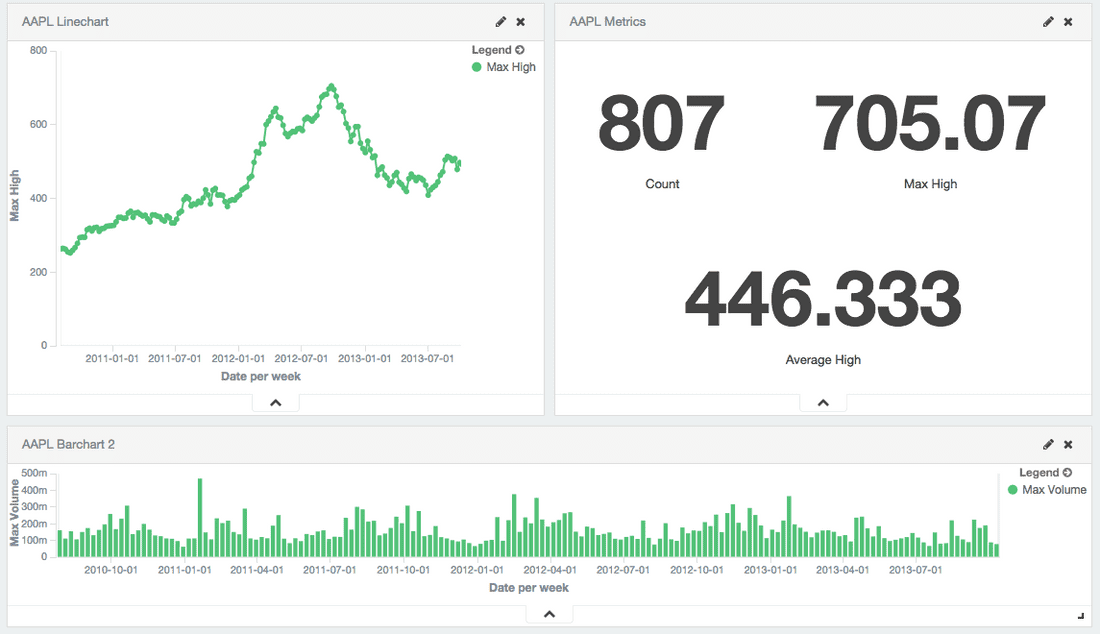

A Linechart Widget

One visualization I want to add to the dashboard later is a line chart showing the highest value of the stock for each day. To do that in Kibana, first select the visualization type, in this case a line chart. Then tell Kibana which data to use for the x- and y-axes. For the y-axis, we want the max value of the "High" field in our dataset. The x-axis is a date histogram over the "Date" field with a daily interval.
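Kibana builds its charts from Elasticsearch aggregations. As an approximation (not the exact request Kibana sends), the line chart corresponds to a date_histogram aggregation with one bucket per day and a max sub-aggregation on "High". The sketch below builds that request body for POST /stock/_search; calendar_interval is the parameter name in Elasticsearch 7.2 and later, while older versions used interval. The function and bucket names are hypothetical.

```python
def daily_max_query(field="High", time_field="Date"):
    """Aggregation request roughly matching the line chart: max(field) per day."""
    return {
        "size": 0,  # only the aggregation buckets are needed, not the documents
        "aggs": {
            "per_day": {
                "date_histogram": {"field": time_field, "calendar_interval": "day"},
                "aggs": {"max_value": {"max": {"field": field}}},
            }
        },
    }
```

Each bucket in the response holds a day (the x value) and its `max_value` (the y value), which is exactly what the line chart plots.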

After configuring the chart, you should see a line showing the development of the stock price over time. If you are happy with the chart, save it under a name so you can use it in a dashboard later.

A Barchart Widget

Another visualization we can create with the example dataset is a bar chart showing the volume of the stock for each day. To do this, go back to the visualization page and select "Vertical Bar Chart" from the list.

Now configure the chart the same way as the line chart we created before. To make it look different from the line chart, I used another field ("Volume") for the bar chart.
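To see what the bars represent, here is the same daily bucketing computed locally in plain Python on already parsed events (dictionaries with a "Date" string and numeric fields, like those produced by the csv and mutate filters). The function name is hypothetical. Since the dataset has one row per trading day, each daily maximum is simply that day's value; the aggregation matters once you switch to coarser intervals such as weeks or months.

```python
from collections import defaultdict


def daily_max(events, field, time_field="Date"):
    """Group events by day and keep the largest value of `field` in each bucket."""
    buckets = defaultdict(lambda: float("-inf"))
    for event in events:
        day = event[time_field][:10]  # "YYYY-MM-DD" prefix of the timestamp
        buckets[day] = max(buckets[day], event[field])
    # Sort by day so the buckets come out in chart order.
    return dict(sorted(buckets.items()))
```

Calling `daily_max(events, "Volume")` gives one bar height per day; `daily_max(events, "High")` gives the points of the line chart.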

A Metric Widget

Metric widgets in Kibana are quite useful, too. This type of visualization displays key values of the dataset and can also perform basic calculations, such as the average, minimum or maximum of a field.

The configuration of this widget is simple: You just have to tell Kibana which type of aggregation it should show and which field to use.

With the example dataset, I created a metric widget that shows the "Count" (the number of items in the dataset), the "Max High" (the highest peak in the dataset) and the "Average High" (the average value of the "High" field). The values of the metrics, like those of the other visualizations, depend on the time frame you set in Kibana, so the numbers update dynamically when you narrow the time frame. This makes it easy to get a lot of information from this widget, for example the average stock price over the last X years compared to the highest price in the same period.
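The three metrics, and the way they follow the selected time frame, can be reproduced in a few lines of Python. This sketch assumes parsed events with an ISO "Date" string and a numeric "High" field; start and end are inclusive dates standing in for Kibana's time picker, and the function name is hypothetical.

```python
from datetime import date


def high_metrics(events, start, end):
    """Count, max and average of "High" for events with start <= Date <= end."""
    highs = [
        e["High"] for e in events
        if start <= date.fromisoformat(e["Date"]) <= end
    ]
    if not highs:
        # An empty time frame has no max or average, only a count of zero.
        return {"count": 0, "max_high": None, "avg_high": None}
    return {
        "count": len(highs),
        "max_high": max(highs),
        "avg_high": sum(highs) / len(highs),
    }
```

Narrowing `start` and `end` changes all three numbers at once, which mirrors what happens in the widget when you change the time frame.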

Creating a Dashboard with the visualizations

The visualizations we have configured and saved can be used together in a dashboard. To create a new dashboard, navigate to the "Dashboard" section in Kibana. You can now add the visualizations to the dashboard using the "+" icon in the upper right corner.

You can drag and resize the widgets as you like to customize the dashboard to your needs. If everything looks good, you can start playing around with the data by zooming the charts or selecting a different time range.

update 2015-05-26: removed duplicate entry in logstash config. Thanks, @iinquisitivee

Further Reading